Which Machine Learning Potential to Choose?

Lost in the sea of machine learning potentials? Our overview and recommendations, based on a balanced analysis, are just out in Chemical Science. In brief, kernel methods are a better choice for small to moderately sized data sets in terms of both accuracy and speed, while NNs such as the ANI type are better for huge data sets. We provide a platform based on MLatom, which we recommend using for fair evaluation of other potentials on an equal footing.

· Max Pinheiro Jr, Fuchun Ge, Nicolas Ferré, Pavlo O. Dral, Mario Barbatti. Choosing the right molecular machine learning potential. Chem. Sci., 2021, accepted. DOI: 10.1039/D1SC03564A.

Constructing the potential energy surface (PES) of a molecule is a hassle: the underlying quantum chemical calculations demand a huge amount of computational resources and our precious time, which limits PES-related applications.

Fortunately, machine learning potentials (MLPs) take advantage of rapid developments in machine learning and data science to construct molecular PESs more efficiently, enabling fast predictions in applications such as geometry optimization, transition-state search, vibrational analysis, and absorption and emission spectra simulation. We recommend reading this excellent collection of related reviews in a special issue of Chem. Rev.

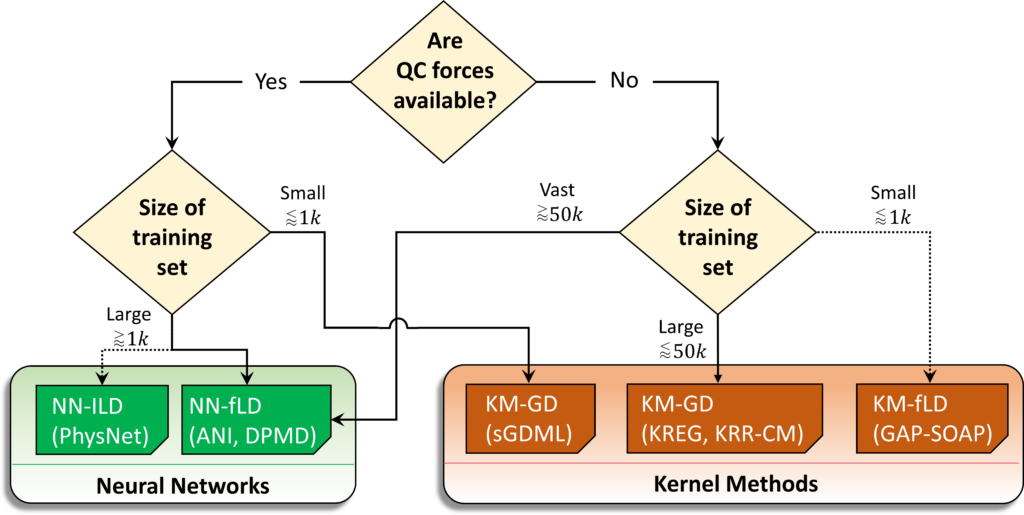

However, there are so many types and implementations of ML models available off the shelf for MLPs that it is easy to get lost. As the figure shows, MLP models mainly differ in their algorithm and their descriptor.

For the algorithms, kernel methods (KMs) and neural networks (NNs) are the two predominant types. In KMs, the parameters can be determined analytically once the hyperparameters are fixed, while NN parameters require iterative optimization. Descriptors may be global (describing the entire molecule) or local (describing only the immediate environment around each atom). Local descriptors may be either fixed (determined beforehand) or learned by the machine learning model.
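To illustrate what "determined analytically" means for KMs, here is a minimal sketch of kernel ridge regression (KRR) with a Gaussian kernel: once the hyperparameters (the kernel width `sigma` and the regularization `lam`) are fixed, the regression coefficients follow from a single linear solve, with no iterative optimization. The function names, the toy 1D target, and the hyperparameter values are illustrative assumptions, not MLatom's implementation.

```python
import numpy as np


def gaussian_kernel(A, B, sigma):
    """Gaussian kernel matrix k(a, b) = exp(-|a - b|^2 / (2 sigma^2))."""
    sq_dist = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq_dist / (2.0 * sigma**2))


def krr_fit(X, y, sigma, lam):
    """Closed-form training: solve (K + lam * I) alpha = y in one step."""
    K = gaussian_kernel(X, X, sigma)
    return np.linalg.solve(K + lam * np.eye(len(X)), y)


def krr_predict(X_train, alpha, X_new, sigma):
    """Prediction: weighted sum of kernels centered on the training points."""
    return gaussian_kernel(X_new, X_train, sigma) @ alpha


# Toy 1D "PES" (hypothetical): E(x) = x^2 sampled at 15 points.
# sigma and lam are set by hand here; in practice they are tuned,
# e.g. on a validation set.
X_train = np.linspace(-2.0, 2.0, 15).reshape(-1, 1)
y_train = X_train[:, 0] ** 2
alpha = krr_fit(X_train, y_train, sigma=0.7, lam=1e-8)
y_pred = krr_predict(X_train, alpha, np.array([[0.5]]), sigma=0.7)
print(y_pred)  # close to 0.25
```

An NN with the same purpose would instead start from random weights and minimize the loss over many gradient-descent iterations.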

All these nuances affect an MLP's real-world performance. Finding the kind that suits your needs becomes a real challenge for users, especially those who just want a plug-and-play solution for their research.

To save you from MLP decidophobia, we benchmarked on the MD17 dataset a careful selection of MLP models covering all the categories shown in the figure above, to give insight into choosing a proper type of MLP (we would like to test all the MLPs on the market, but their sheer number makes this task insurmountable!). These models are:

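- KREG (kernel ridge regression with the RE descriptor and Gaussian kernel) and pKREG (its permutationally invariant variant)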

- KRR-CM (kernel ridge regression with the Coulomb matrix)

- KRR-aXYZ (aXYZ – aligned Cartesian coordinates, used as a “null” model)

- ANI

- DPMD

- GAP–SOAP

- PhysNet

- sGDML
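Two of the global descriptors behind the kernel models above can be sketched in a few lines. The Coulomb matrix (used in KRR-CM) is commonly defined with diagonal elements 0.5·Z_i^2.4 and off-diagonal elements Z_i·Z_j/|R_i − R_j|; the RE descriptor used in KREG consists of the ratios r_ij(eq)/r_ij of interatomic distances in a reference equilibrium geometry to those in the current geometry. The helper names below are hypothetical, and details such as units (atomic units are conventional for the Coulomb matrix), atom ordering, and vectorization in the actual MLatom implementation may differ.

```python
import math


def coulomb_matrix(Z, R):
    """Global Coulomb-matrix descriptor for nuclear charges Z and coordinates R."""
    n = len(Z)
    M = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                M[i][j] = 0.5 * Z[i] ** 2.4
            else:
                M[i][j] = Z[i] * Z[j] / math.dist(R[i], R[j])
    return M


def re_descriptor(R, R_eq):
    """RE descriptor: r_ij(eq) / r_ij for every unique atom pair i < j."""
    n = len(R)
    return [
        math.dist(R_eq[i], R_eq[j]) / math.dist(R[i], R[j])
        for i in range(n)
        for j in range(i + 1, n)
    ]


# Hypothetical H2 example: equilibrium bond length 0.74, then stretched 2x.
R_eq = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.74)]
R_stretched = [(0.0, 0.0, 0.0), (0.0, 0.0, 1.48)]
print(coulomb_matrix([1, 1], R_eq))
print(re_descriptor(R_eq, R_eq))          # ~[1.0] at equilibrium
print(re_descriptor(R_stretched, R_eq))   # ~[0.5] when stretched
```

Both descriptors describe the whole molecule at once, which is what makes them "global" in the classification above; local descriptors such as those used by ANI or SOAP are instead computed per atom.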

All of these models were tested through the MLatom 2 platform (see the previous post or the paper for details) to automatically generate learning curve data.
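A learning curve is simply the test error as a function of training set size, typically averaged over several random draws of the training set. The sketch below shows the idea with a self-written Gaussian-kernel KRR on a hypothetical 1D target (`sin(x)`); it is not how MLatom generates its learning curves, and all names, sizes, and hyperparameters are illustrative assumptions.

```python
import numpy as np


def gaussian_kernel(A, B, sigma):
    sq_dist = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq_dist / (2.0 * sigma**2))


def learning_curve(X, y, X_test, y_test, sizes, sigma=0.5, lam=1e-6,
                   repeats=3, seed=0):
    """Return [(n_train, mean test RMSE)] for each requested training size."""
    rng = np.random.default_rng(seed)
    curve = []
    for n in sizes:
        errors = []
        for _ in range(repeats):
            # Draw a random training subset of size n from the pool.
            idx = rng.choice(len(X), size=n, replace=False)
            K = gaussian_kernel(X[idx], X[idx], sigma)
            alpha = np.linalg.solve(K + lam * np.eye(n), y[idx])
            pred = gaussian_kernel(X_test, X[idx], sigma) @ alpha
            errors.append(np.sqrt(np.mean((pred - y_test) ** 2)))
        curve.append((n, float(np.mean(errors))))
    return curve


# Hypothetical data pool and fixed test set.
rng = np.random.default_rng(42)
X_pool = rng.uniform(-3.0, 3.0, size=(500, 1))
y_pool = np.sin(X_pool[:, 0])
X_test = np.linspace(-2.5, 2.5, 200).reshape(-1, 1)
y_test = np.sin(X_test[:, 0])

curve = learning_curve(X_pool, y_pool, X_test, y_test, sizes=[5, 20, 80])
for n, rmse in curve:
    print(n, rmse)
```

Keeping the test set fixed while only the training subset varies is what makes points on the curve comparable, and the same protocol applied to every model is what allows a comparison on an equal footing.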

We first examined model performance with energies as the only training property. Taking ethanol as the molecule to examine in depth, we collected model accuracies and timings (both training and prediction) for 8 MLP models over a dense grid of training set sizes:

From the results plotted above, we can see a clear win for KM models (except KRR-aXYZ, which acts as a "null" model for MLPs here): they outperform NN models in both accuracy and efficiency. The GAP-SOAP model, which combines a KM with a local descriptor, gave impressive accuracy (albeit at a higher computational cost), while the KREG model and its permutationally invariant variant (no pun intended) are the most efficient. For predictions, the time cost of KM models grows with the number of training points, while it stays almost unchanged for NN models. This is expected: KMs are nonparametric models whose predictions involve every training point, whereas NNs are parametric models with a fixed number of parameters.
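The prediction-time scaling follows directly from the form of the two model types. A KRR prediction is a sum over all N training points, so its cost grows linearly with N, whereas a dense NN forward pass costs a number of multiply-adds fixed by its architecture. The sketch below counts both; the layer sizes are a hypothetical example, not the architecture of ANI or any other benchmarked model.

```python
import math


def krr_predict_one(x, X_train, alpha, sigma):
    """Gaussian-kernel KRR prediction; returns (value, kernel evaluations)."""
    total = 0.0
    n_kernel_evals = 0
    # One kernel evaluation per training point: cost grows with N.
    for xi, ai in zip(X_train, alpha):
        sq_dist = sum((a - b) ** 2 for a, b in zip(x, xi))
        total += ai * math.exp(-sq_dist / (2.0 * sigma**2))
        n_kernel_evals += 1
    return total, n_kernel_evals


def mlp_multiply_adds(layer_sizes):
    """Multiply-adds in one dense forward pass: fixed by the architecture."""
    return sum(a * b for a, b in zip(layer_sizes[:-1], layer_sizes[1:]))


kernel_evals = {}
for n_train in (100, 1000, 10000):
    X_train = [[i / n_train] for i in range(n_train)]  # dummy training inputs
    alpha = [1.0] * n_train                            # dummy coefficients
    _, evals = krr_predict_one([0.5], X_train, alpha, sigma=0.1)
    kernel_evals[n_train] = evals

nn_cost = mlp_multiply_adds([36, 64, 64, 1])  # hypothetical layer sizes
print(kernel_evals, nn_cost)
```

The KM count tracks the training set size exactly, while the NN count stays the same no matter how much data the network was trained on.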

Since force information (forces being the negative gradients of the energy) is often also available for constructing MLPs, and is important in many applications such as molecular dynamics, we also benchmarked model performance when learning both energies and gradients.
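The relation "force = negative energy gradient" can be checked numerically. The sketch below uses a one-dimensional Morse potential with illustrative parameters (not fitted to any molecule in the benchmark) and compares the analytic force with a central finite difference of the energy. For a real molecule the same relation holds for each Cartesian coordinate, so every geometry supplies 3 × (number of atoms) force components in addition to one energy.

```python
import math


def morse_energy(r, D=0.17, a=1.0, r_e=1.4):
    """Morse potential E(r) = D * (1 - exp(-a (r - r_e)))^2 (illustrative values)."""
    return D * (1.0 - math.exp(-a * (r - r_e))) ** 2


def morse_force(r, D=0.17, a=1.0, r_e=1.4):
    """Analytic force F = -dE/dr."""
    e = math.exp(-a * (r - r_e))
    return -2.0 * D * a * e * (1.0 - e)


def finite_difference_force(energy, r, h=1e-5):
    """Force from a central finite difference of the energy: -dE/dr."""
    return -(energy(r + h) - energy(r - h)) / (2.0 * h)


r = 1.8  # stretched beyond r_e, so the force pulls the atoms back (F < 0)
print(morse_force(r), finite_difference_force(morse_energy, r))
```

Models trained on gradients exploit exactly this link: the predicted forces are obtained by differentiating the predicted energy.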

The extra information in gradients makes the gap between KMs and NNs much smaller. It is clear, however, that kernel methods such as sGDML and GAP-SOAP are still more accurate here, which can greatly reduce the number of training points required to reach a target accuracy. For very large training sets, NNs even become more efficient, since the computational cost of KMs increases dramatically when force components are included.
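A back-of-the-envelope estimate shows why including forces is so expensive for KMs. Assume a generic, full (non-sparse) kernel model that treats every energy and every force component as its own label, with a dense O(M³) linear solve and O(M²) kernel-matrix memory for M labels. Real implementations differ (GAP uses sparsification, and GDML-type models build their kernel from force components), so the numbers below are only an illustration for ethanol (9 atoms).

```python
def n_labels(n_geometries, n_atoms, with_forces):
    """Training labels: one energy per geometry, plus 3 * n_atoms force components."""
    per_geometry = 1 + 3 * n_atoms if with_forces else 1
    return n_geometries * per_geometry


n_train = 1000
n_atoms = 9  # ethanol, C2H6O

energies_only = n_labels(n_train, n_atoms, with_forces=False)
with_forces = n_labels(n_train, n_atoms, with_forces=True)
ratio = with_forces // energies_only  # size factor of the kernel matrix side

# Under the stated assumptions: memory grows ~ratio**2, dense solve ~ratio**3.
print(energies_only, with_forces, ratio, ratio**2, ratio**3)
# 1000 28000 28 784 21952
```

For the same number of geometries, the kernel matrix side grows 28-fold, which under these assumptions means roughly 784 times more memory and about 21952 times more work for the solve. Each geometry therefore carries far more information but also costs far more to train on, and this is where NNs catch up.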

For other molecules in the dataset, we also observed similar trends.

In general, we tried to make our results as reproducible as possible. That is why we provide the raw benchmark data, including sample input files, on mlatom.com, and why all MLatom code and our scripts are open source. Update (2021-09-24): see our tutorial on how to run benchmarks with MLatom and reproduce our results.

The summary of our analysis is given at the top of this post. Basically, the choice of MLP mainly depends on your dataset: whether force information is available and how large the training set is. If you count force components as additional training data, then with a small number of training points you should choose KMs, while for larger training sets NNs are preferred: it's all about how much information you have in your data.

This work was published in Chemical Science, an excellent, truly open journal operating as diamond open access: you as a reader do not need to pay to access the article, and we as authors did not need to pay for open access, for which we are very thankful! The full text is freely available via DOI: 10.1039/D1SC03564A.
